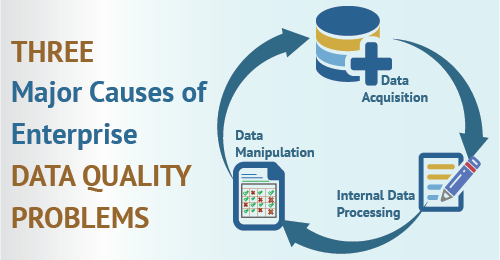

In this blog post, we look at steps you can take to improve the quality of your data and outline an action plan that you can implement straight away!

Download our Data Quality Improvement Checklist to start turning big data into business insights.

Determine what you need from your data and how you will evaluate “quality data”

So by now, you’re no doubt aware that the quality of your data is important. In fact, it can even be a competitive differentiator that sets your business apart from others. One of the first steps on the path to improving the quality of your data is to determine how you will evaluate what you deem to be “quality data”.

Data quality can be subjective and will mean different things to different businesses or problem domains. For some, it could mean ensuring that customer contact details are up-to-date in CRM systems; a retailer like Amazon couldn’t fulfill orders if this information was fragmented or incomplete. For others, such as marketing firms, it may mean ensuring that customer segments have all the data sets needed to drive ad-tech campaigns.

In a nutshell, the evaluation phase is about determining if the data you have is fit for purpose.

With this in mind, one of the first steps is to determine the business purpose for using your data and align your quality checks around that.

From there, you can develop KPIs that are specific and relevant to your organization, which will help you track quality, engagement and any other metrics that you deem to be of interest. This approach will also help you define a clear ROI for your data quality and analytics efforts.
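
As an illustration, the Python sketch below computes two common data quality KPIs over a batch of customer records: completeness (the share of records with every required field filled in) and validity (here, the share with a plausibly formatted email address). The field names, sample records and email pattern are hypothetical assumptions, not a standard; your own KPIs should follow from the business purpose you identified.

```python
import re

# Hypothetical required fields for a customer record; adjust to your business purpose.
REQUIRED_FIELDS = ("name", "email", "country")

# Deliberately simple email pattern, assumed for illustration (not a full RFC 5322 check).
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(records):
    """Share of records in which every required field is present and non-blank."""
    if not records:
        return 0.0
    complete = sum(
        1
        for record in records
        if all(str(record.get(field) or "").strip() for field in REQUIRED_FIELDS)
    )
    return complete / len(records)


def email_validity(records):
    """Share of records whose email matches the simple pattern above."""
    if not records:
        return 0.0
    valid = sum(1 for record in records if EMAIL_PATTERN.match(record.get("email") or ""))
    return valid / len(records)


if __name__ == "__main__":
    customers = [
        {"name": "Ana", "email": "ana@example.com", "country": "US"},
        {"name": "Ben", "email": "ben(at)example.com", "country": "US"},
        {"name": "Cai", "email": "", "country": ""},
        {"name": "Dee", "email": "dee@example.org", "country": "CR"},
    ]
    print(f"Completeness: {completeness(customers):.0%}")
    print(f"Email validity: {email_validity(customers):.0%}")
```

Run as a script, this prints `Completeness: 75%` and `Email validity: 50%` for the sample data. Tracking figures like these over time gives you a baseline for measuring improvement.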

Centralize ownership of your data

By now you’ve determined what markers will signal that you have quality data for your business. Another step you can take to keep your data management efforts on track is to centralize ownership of all data-related activities. Depending on the size of your organization, this owner may be a CDO (Chief Data Officer) or, for smaller businesses and organizations, a DBA (database administrator) who acts as the custodian of your data quality.

By assigning a single owner, you’ll have one person taking responsibility for all data-related assets and activities. You might even find that centralizing all data-related strategies under one owner leads to business optimizations and, in turn, increased profits.

Your CDO will need support to deploy their strategies and processes, including a team of data professionals to help drive their data quality efforts. With that support in place, the quality of your data processing should improve!

Prevent record duplication

Duplicate data costs your business money in various ways. It can take time to identify the correct record, result in confusion and even affect your bottom line! Here are some actions you can take to identify duplicate records in your databases and IT systems, and to reduce the likelihood of duplicates occurring:

- Perform checks when new data is inserted

- Set up triggers or notifications for when other systems may have inserted a duplicate

- Offer “merging” features to merge old records with new

- Run weekly reports to identify potential duplicates

Deploying some of these strategies will help ensure that you maintain a good level of quality in your databases, software and IT systems.
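
To show what the first and last checks in the list above might look like, here is a minimal Python sketch. It uses an in-memory SQLite database as a stand-in for your own system: a `UNIQUE` constraint on a normalized email address blocks exact duplicates at insert time, and a simple similarity score from Python’s `difflib` flags near-duplicate names for a periodic review. The table layout, the choice of email as the unique key and the 0.85 threshold are all assumptions for illustration.

```python
import sqlite3
from difflib import SequenceMatcher
from itertools import combinations


def create_store():
    """In-memory customer table; the UNIQUE constraint rejects exact duplicates on insert."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers ("
        " id INTEGER PRIMARY KEY,"
        " name TEXT NOT NULL,"
        " email TEXT NOT NULL UNIQUE)"
    )
    return conn


def insert_customer(conn, name, email):
    """Insert a customer; return False instead of creating a duplicate email."""
    # Normalize before storing so ' JON@example.com' and 'jon@example.com' collide.
    email = email.strip().lower()
    try:
        with conn:  # commits on success, rolls back if the insert fails
            conn.execute(
                "INSERT INTO customers (name, email) VALUES (?, ?)", (name.strip(), email)
            )
        return True
    except sqlite3.IntegrityError:
        return False


def potential_duplicates(conn, threshold=0.85):
    """Report pairs of records with very similar names, e.g. for a weekly review."""
    rows = conn.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
    report = []
    for (id_a, name_a), (id_b, name_b) in combinations(rows, 2):
        score = SequenceMatcher(None, name_a.lower(), name_b.lower()).ratio()
        if score >= threshold:
            report.append((id_a, id_b, round(score, 2)))
    return report


if __name__ == "__main__":
    conn = create_store()
    print(insert_customer(conn, "Jon Smith", "jon@example.com"))          # True
    print(insert_customer(conn, "Jon Smith", " JON@example.com"))         # False: duplicate email
    print(insert_customer(conn, "John Smith", "john.smith@example.com"))  # True
    print(potential_duplicates(conn))  # flags the Jon/John Smith pair for review
```

A similarity threshold is a trade-off: set it too low and reviewers are flooded with false positives; set it too high and genuine duplicates slip through.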

Normalize your data

This leads on from our last point. Data normalization matters in scenarios like the following:

You import customer location data from several different third-party systems via web services and FTP files, which then get ingested and processed as part of your data-related activities. You find that the address and location data include a variety of different spellings for the United States, such as:

- United States

- USA

- U.S

From our last point, we know that duplicated information is a no-no! A machine would treat these as three different countries, but to the human eye, they clearly point to the same thing. This is where normalization is your friend. As part of your data processing activities, be sure to identify instances like this and map them to a single value or, even better, use a database reference table that stores one unique entry for each value, such as each country.
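
Here is a minimal Python sketch of the reference table approach, using an in-memory SQLite database. A `countries` table holds one canonical row per country, and a `country_aliases` table maps known spelling variants to it. The table names, the alias list and the clean-up rule (lower-case, drop dots, collapse spaces) are assumptions for illustration. Unknown values return `None` so they can be flagged for review rather than guessed.

```python
import sqlite3


def build_reference_db():
    """Create a reference table of countries plus a table of known spelling variants."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE countries (
            code TEXT PRIMARY KEY,          -- one canonical row per country
            name TEXT NOT NULL UNIQUE
        );
        CREATE TABLE country_aliases (
            alias TEXT PRIMARY KEY,         -- variant spelling, stored already cleaned up
            code  TEXT NOT NULL REFERENCES countries(code)
        );
        INSERT INTO countries VALUES ('US', 'United States');
        INSERT INTO country_aliases VALUES
            ('united states', 'US'),
            ('united states of america', 'US'),
            ('usa', 'US'),
            ('us', 'US');
        """
    )
    return conn


def normalize_country(conn, raw):
    """Map a raw country string to its canonical code, or None if it is unknown."""
    # Lower-case, drop dots and collapse whitespace, so 'U.S' becomes 'us'.
    key = " ".join(raw.lower().replace(".", "").split())
    row = conn.execute(
        "SELECT code FROM country_aliases WHERE alias = ?", (key,)
    ).fetchone()
    return row[0] if row else None


if __name__ == "__main__":
    conn = build_reference_db()
    for raw in ["United States", "USA", "U.S", "Untied States"]:
        print(f"{raw!r} -> {normalize_country(conn, raw)}")
```

The first three inputs all resolve to `US`, while the misspelled `'Untied States'` returns `None` and can be routed to a person who decides whether it deserves a new alias.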

Normalizing your data helps keep it clean and improves its overall quality!

Reduce free-form text

Slightly connected to the last point, but also worth a mention, is free-form text. Web applications and systems around the world often let users and customers input data on web forms and thick client applications using many different types of input control. You can increase the quality of your data by reducing the number of fields that let users input free-form text.

Even the most IT-savvy user is going to make mistakes when keying in data. Use coded fields in the form of drop-down lists, radio buttons, checkboxes, list controls and other controls that force the user to pick from a predefined set of options.

Deploying this strategy does a few things:

- Enforces a data quality standard at the point of entry

- Makes your data much easier to query and report over

- Ensures consistency across the board
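
Coded fields work best when the options shown in the form and the check applied on the server come from the same list, because a user or script can still submit a value the drop-down never offered. The Python sketch below illustrates this with an `Enum`; the `ContactMethod` field and its values are hypothetical.

```python
from enum import Enum


class ContactMethod(Enum):
    """Hypothetical coded field: the only values the drop-down offers."""

    EMAIL = "email"
    PHONE = "phone"
    POST = "post"


def dropdown_options():
    """(value, label) pairs to render in the form, taken from the same Enum."""
    return [(method.value, method.name.title()) for method in ContactMethod]


def parse_contact_method(value):
    """Accept only one of the predefined codes; reject anything else."""
    try:
        return ContactMethod(value)
    except ValueError:
        allowed = ", ".join(method.value for method in ContactMethod)
        raise ValueError(
            f"Invalid contact method {value!r}; expected one of: {allowed}"
        ) from None
```

Here `parse_contact_method("email")` returns `ContactMethod.EMAIL`, while `parse_contact_method("e-mail")` raises a `ValueError` listing the allowed codes, so the bad value never reaches your database.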

Be vigilant when integrating new data sources

Integrating data from other systems can be a headache for IT departments and requires extra vigilance. Unique identifiers may differ from system to system, some data types may not be supported, and so on.

When ingesting data from other databases or systems, take the time to properly map System A to System B to ensure that:

- All data is correctly mapped

- All data is correctly converted

- Any exceptions are identified and logged

Do this and you’ll ensure that any data you integrate is complete and plays nicely with your existing system!
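
A minimal Python sketch of mapping System A to System B might look like the following. Each source field is paired with a target field and a converter, and any record that is missing a field or fails conversion is logged and set aside instead of being loaded. The field names, the `dd/mm/yyyy` date format and the choice to reject a whole record on any failure are assumptions for illustration; you might instead load partial records and flag them.

```python
import logging
from datetime import datetime

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("integration")

# Hypothetical mapping: System A field -> (System B field, converter).
FIELD_MAP = {
    "CUST_NO": ("customer_id", int),
    "FULL_NAME": ("name", str.strip),
    "SIGNUP_DT": ("signup_date", lambda s: datetime.strptime(s, "%d/%m/%Y").date()),
}


def map_record(source):
    """Map and convert one System A record; log and return None if any field fails."""
    target = {}
    for src_field, (dst_field, convert) in FIELD_MAP.items():
        if src_field not in source:
            log.warning("Record %r: missing field %s", source, src_field)
            return None
        try:
            target[dst_field] = convert(source[src_field])
        except (TypeError, ValueError) as exc:
            log.warning(
                "Record %r: cannot convert %s=%r (%s)",
                source, src_field, source[src_field], exc,
            )
            return None
    return target


def integrate(records):
    """Split incoming records into mapped rows and rejected (already logged) records."""
    mapped, rejected = [], []
    for record in records:
        result = map_record(record)
        if result is None:
            rejected.append(record)
        else:
            mapped.append(result)
    return mapped, rejected


if __name__ == "__main__":
    incoming = [
        {"CUST_NO": "1001", "FULL_NAME": " Ana Diaz ", "SIGNUP_DT": "05/03/2021"},
        {"CUST_NO": "A-17", "FULL_NAME": "Ben Ruiz", "SIGNUP_DT": "2021-03-05"},
    ]
    mapped, rejected = integrate(incoming)
    print(mapped)    # the first record, converted to System B's fields and types
    print(rejected)  # the second record; the reason is also written to the log
```

Keeping the mapping in one table-like structure makes it easy to review with the owners of both systems before any data is moved.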

Summary

In this article, we’ve looked at some steps you can take to improve the quality of your data. We’ve seen why you need to identify your main KPIs first and why appointing a single custodian for all data activities is important.

We’ve looked at why reducing the likelihood of duplicates is important, and how normalizing data and limiting free-form text input can help you achieve this. Finally, we’ve seen why integrating new data sources calls for extra vigilance.

If you work with data or are thinking about ways to improve its quality, we hope you now have some more ideas about how to make this happen.

Here at Growth Acceleration Partners, we have extensive expertise in many verticals. Our nearshore business model can keep costs down whilst maintaining the same level of quality and professionalism you’d experience from a domestic team.

Our Centers of Engineering Excellence in Latin America focus on combining business acumen with development expertise to help your business. We can provide your organization with resources in the following areas:

- Software development for cloud and mobile applications

- Data analytics and data science

- Information systems

- Machine learning and artificial intelligence

- Predictive modeling

- QA and QA Automation

If you’d like to find out more, visit our website or, if you’d prefer, arrange a call with us.