Growth Acceleration Partners | December 5, 2025

Founders’ Guide to Scaling Their Tech Teams in 2026

One critical challenge that keeps many tech founders up at night, beyond securing funding, driving product innovation, and achieving faster time-to-market, is finding, hiring, and retaining the right talent. Although this isn’t a new problem, recent shifts in global policies, rising labor costs, and the rapid pace of AI innovation have intensified it. The demand for […]

Growth Acceleration Partners | December 5, 2025

AI in Low-Code Platforms: Balancing Speed, Safety and Scale

When it comes to accelerating digital transformation, few trends have reshaped enterprise development as rapidly as the convergence of AI and low-code platforms. Once used primarily for simple workflows and prototypes, today’s low-code tools are infused with generative AI-assisted capabilities. They can now enable entire business units to build and deploy production-grade applications in record […]

Growth Acceleration Partners | October 29, 2025

The Impact of Trump’s $100,000 Fee for H-1B Visas on Tech Leaders and the Absolute Way Out

Since September 19, 2025, every move to hire international tech talent comes with a staggering new price tag of $100,000 per H-1B petition. That’s six figures gone before a new hire even starts. The announcement is shaking boardrooms and unsettling tech leaders. However, beyond the shock, the pressing question is how many will adjust their […]

Growth Acceleration Partners | October 14, 2025

AI Governance in Media Analytics: Four Principles That Build Trust and ROI

AI drives much of today’s media insight and audience analytics, yet without oversight, it can just as easily damage trust as deliver ROI. Think about a streaming platform using AI to measure ads. If a brand runs a nationwide campaign expecting broad reach, but the system favors one demographic that clicks more often, the results […]

Growth Acceleration Partners | October 14, 2025

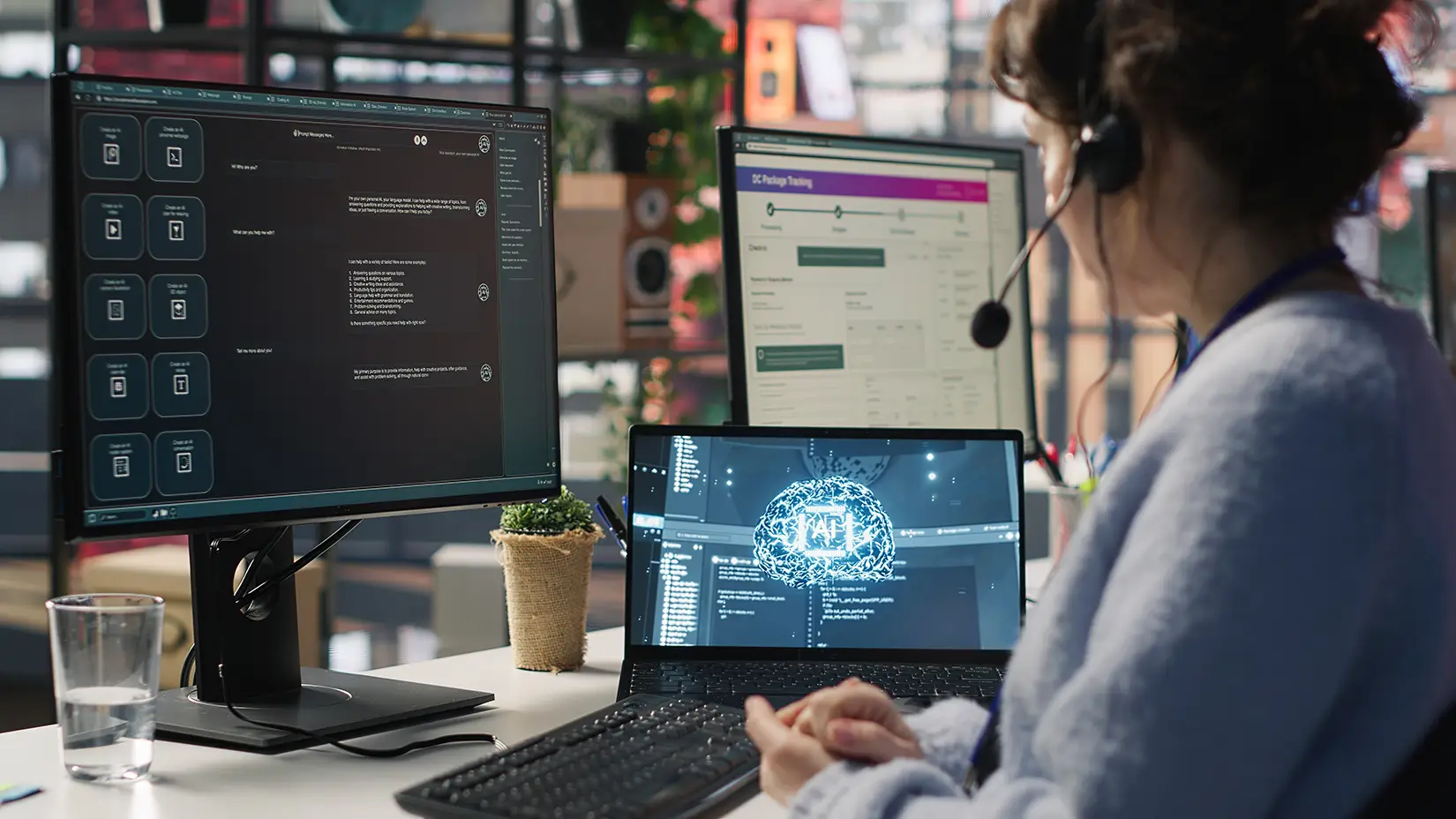

Agentic AI Adoption: What It Is and Why Trust Drives It

In the last few years, AI has helped businesses improve efficiency through large language models (LLMs) and automation tools. As the technology matures, AI is beginning to reach a new phase: fully automated virtual agents that aim to perform tasks the way a real person would. But this vision will only become a common reality […]

Growth Acceleration Partners | October 14, 2025

AI in Education: Building Smarter K-12 Enrollment

If an enrollment portal crashes on the first day of kindergarten registration, parents may have to trek to the district office with stacks of paper forms. Or an administrator may spend weeks re-entering transfer requests into multiple systems, only to deal with errors and daily calls from worried families. There are even cases when non-English-speaking […]

Gabriel Capon | October 14, 2025

AI for Community Banking: Enhancing Security and Personalization

Community banks are under more pressure than ever. While fintech startups and megabank competitors rapidly scale AI in lending, trading and customer service, regulators are also raising the bar for oversight. At the same time, fraudsters now leverage the same advanced AI tools to launch more sophisticated attacks. Unlike national or global banks that operate […]

Growth Acceleration Partners | October 14, 2025

AI Security and Compliance is the Foundation of Trust in the AI Era

What does your vendor assessment process for AI security and compliance look like? If it still means sending out surveys of 200+ questions every time you bring on a tech/SaaS partner, you already know how draining that can be. The answers often come back vague, exaggerated, and sometimes too generic. As a tech lead, you shouldn’t have […]

Growth Acceleration Partners | October 9, 2025

How to Get Executive Buy-in for AI

Most executives live by a simple but firm mantra: protect revenue, reduce risk, and deliver results quickly. That’s why they are quick to shut down even the most promising AI initiatives before you’ve made your case. If you walk into the boardroom talking about algorithms, data models, or technical roadmaps, you’ll lose them. But if […]

Growth Acceleration Partners | October 8, 2025

AI Mistakes Made in the First 90 Days of AI Adoption (And How to Fix Them)

Artificial intelligence promises innovation and faster growth, but only a few organizations see a good return on their investment. Studies, including RAND’s review of failed AI projects, reveal a common thread: most teams stumble in the first 90 days. This early stage is where excitement meets reality. Your choices in those first weeks set […]